
This is documentation for Rasa X Documentation v0.42.x, which is no longer actively maintained.

For up-to-date documentation, see the latest version (1.1.x).

Track Your Progress

Identifying successful conversations is an important step to understand what’s working and what’s not. Using this measure, you can monitor how your assistant improves as you fix bugs and make changes.

Rasa X is built to help you track your progress by tagging conversations.

Tagging Conversations via the API

For consistent progress tracking, you should find a way to automatically identify conversations that are likely to have been successful. How you do this depends on the use case of your assistant. For example, a few good indicators might be:

a user signed up for your service

a user did not contact support again within 24 hours
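The two example indicators above can be sketched as an automatic check. The Python function below is a hypothetical helper: its inputs (whether the user signed up, and when they next contacted support) are assumptions, and how you obtain them depends on your own systems. It treats either indicator as sufficient, which is only one possible policy. It also waits until the full 24-hour window has passed before counting "no support contact" as a success.

```python
from datetime import datetime, timedelta
from typing import Optional

# Window taken from the example indicator; adjust for your use case.
SUPPORT_WINDOW = timedelta(hours=24)


def looks_successful(
    conversation_end: datetime,
    signed_up: bool,
    next_support_contact: Optional[datetime],
    now: datetime,
) -> bool:
    """Hypothetical heuristic: True if either example indicator holds.

    signed_up: whether the user signed up for the service (assumed input).
    next_support_contact: when the user next contacted support, or None.
    now: the current time, used to tell whether the window has elapsed.
    """
    if signed_up:
        return True
    if next_support_contact is None:
        # No support contact yet: only count it once the window has elapsed.
        return now - conversation_end >= SUPPORT_WINDOW
    # The user did contact support; success only if it was after the window.
    return next_support_contact - conversation_end > SUPPORT_WINDOW
```

Conversations for which this returns `True` are candidates for tagging through the API.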

You can use the Rasa X API to automatically tag conversations when those actions do or don’t occur. Here are a couple of concrete examples:

Carbon bot tags conversations using the Rasa X API when a user clicks a link to purchase carbon offsets

Sara tags conversations using the Rasa X API when a user indicates whether or not they found the docs search results helpful

Tags can be applied to conversations using a POST call to the Rasa X REST API. Make sure to replace {HOST} and {conversation_id} in the request with your own values.

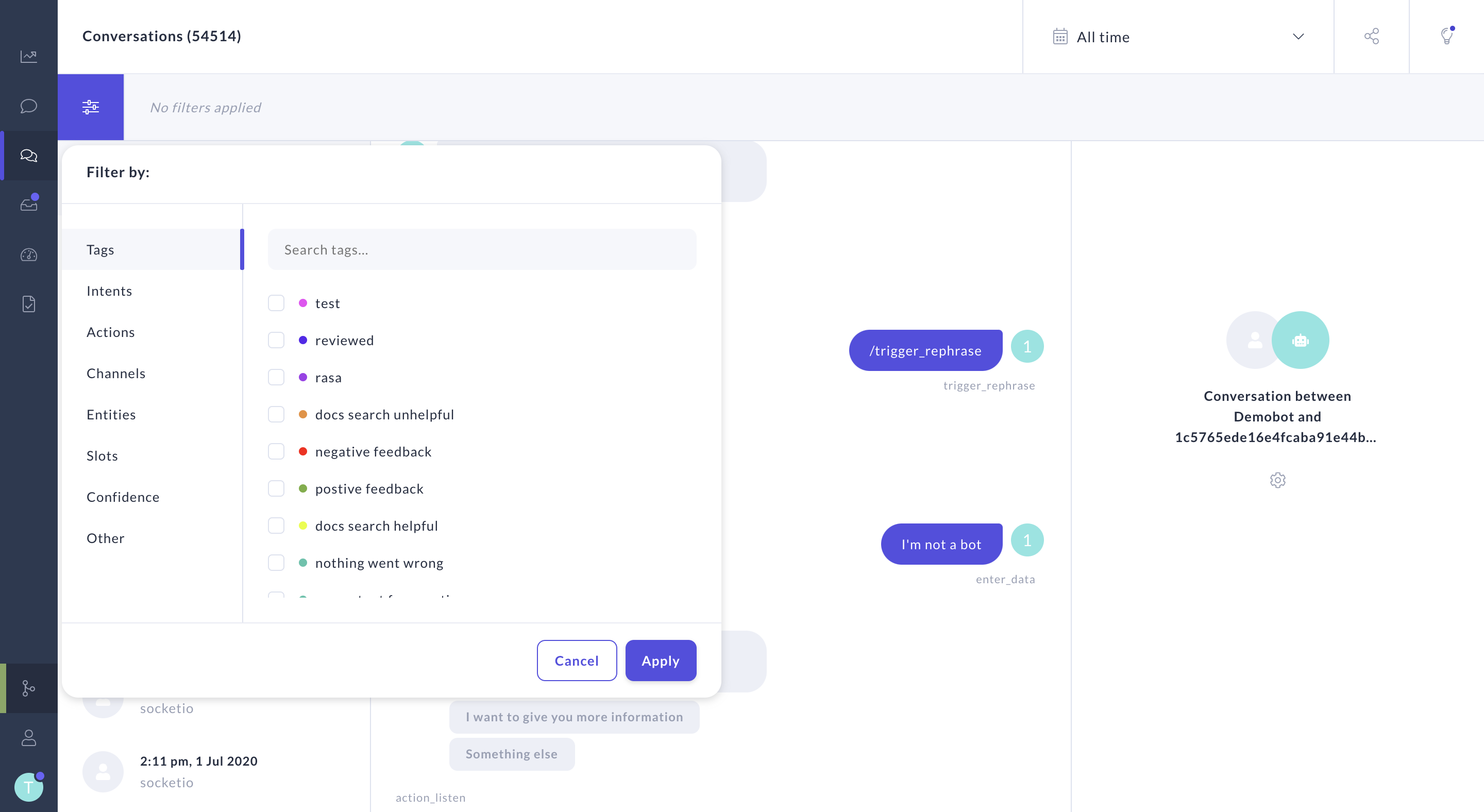
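A minimal Python sketch of building that POST request with the standard library follows. The endpoint path `/api/conversations/{conversation_id}/tags`, the JSON body (a list of tags, each with a `value` and a `color`), the `https` scheme and the Bearer-token header are assumptions about the Rasa X API, not confirmed details. Check the API reference for your Rasa X version before relying on them.

```python
import json
import urllib.request


def build_tag_request(
    host: str, conversation_id: str, token: str, value: str, color: str = "a6e22e"
) -> urllib.request.Request:
    """Build (but do not send) a POST request that tags one conversation.

    Assumed: endpoint path, payload shape, Bearer auth, and the default color.
    """
    url = f"https://{host}/api/conversations/{conversation_id}/tags"
    body = json.dumps([{"value": value, "color": color}]).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Pass the returned request to `urllib.request.urlopen` to actually send it, for example when a user clicks a purchase link or gives positive feedback.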

You can then focus on those specific conversations by filtering for that tag.

You can also delete a tag, removing it from all conversations, using a DELETE call to the Rasa X API.
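A minimal Python sketch of building that DELETE request with the standard library follows. The path `/api/conversations/tags/{tag_id}`, the integer `tag_id`, the `https` scheme and the Bearer-token header are all assumptions; confirm them against the Rasa X API reference for your version before use.

```python
import urllib.request


def build_delete_tag_request(host: str, tag_id: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a DELETE request that removes a tag everywhere.

    Assumed: endpoint path, numeric tag ID, and Bearer authentication.
    """
    url = f"https://{host}/api/conversations/tags/{tag_id}"
    return urllib.request.Request(
        url, method="DELETE", headers={"Authorization": f"Bearer {token}"}
    )
```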

Tagging Conversations in the UI

You can tag conversations in the Rasa X UI during the review process to identify successful conversations. Tags can also be used to identify specific problems with your assistant’s conversations, such as an intent misclassification.

In Rasa X, tags are applied by selecting a conversation on the Conversations page and using the gear icon on the right to select a tag.

You can speed up the process of manually tagging conversations as successes or failures by filtering for conversations where certain events happened. Here are some ideas:

Filter by intent or action confidence to see conversations that include low-confidence predictions

Filter by a fallback action to see where your assistant was not able to move forward

Filter for a specific slot to see if users are filling out a form

Filter for positive or negative intents in feedback flows
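If you export conversations as lists of events, the filter ideas above can also be approximated offline. The sketch below is a hypothetical helper, not how Rasa X implements its filters. It assumes events shaped like Rasa tracker events (`user` events carrying `parse_data.intent`, `action` events with a `name` and `confidence`, `slot` events with a `name` and `value`). The fallback action names, the 0.5 threshold, the `email` slot and the `affirm`/`deny` feedback intents are illustrative assumptions to adjust for your assistant.

```python
from typing import AbstractSet, Any, Dict, Iterable, Set

# Assumed fallback action names; replace with the ones your assistant uses.
FALLBACK_ACTIONS = frozenset({"action_default_fallback", "action_two_stage_fallback"})


def review_flags(
    events: Iterable[Dict[str, Any]],
    threshold: float = 0.5,
    slot_name: str = "email",
    feedback_intents: AbstractSet[str] = frozenset({"affirm", "deny"}),
) -> Set[str]:
    """Return which of the filter ideas apply to one conversation's events."""
    flags: Set[str] = set()
    for event in events:
        kind = event.get("event")
        if kind == "user":
            intent = (event.get("parse_data") or {}).get("intent") or {}
            confidence = intent.get("confidence")
            if confidence is not None and confidence < threshold:
                flags.add("low_confidence")
            if intent.get("name") in feedback_intents:
                flags.add("feedback")
        elif kind == "action":
            if event.get("name") in FALLBACK_ACTIONS:
                flags.add("fallback")
            confidence = event.get("confidence")
            if confidence is not None and confidence < threshold:
                flags.add("low_confidence")
        elif kind == "slot" and event.get("name") == slot_name and event.get("value") is not None:
            flags.add("slot_filled")
    return flags
```

Conversations that return a non-empty set are good candidates to review and tag manually as successes or failures.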